This article was originally published on Momentum.

There is growing evidence, and growing concern, that the algorithms and data underpinning AI can produce bias and ethical injustice. Associate Professor Sarah Kelly discusses the governance and data management considerations necessary for the ethical implementation of AI.

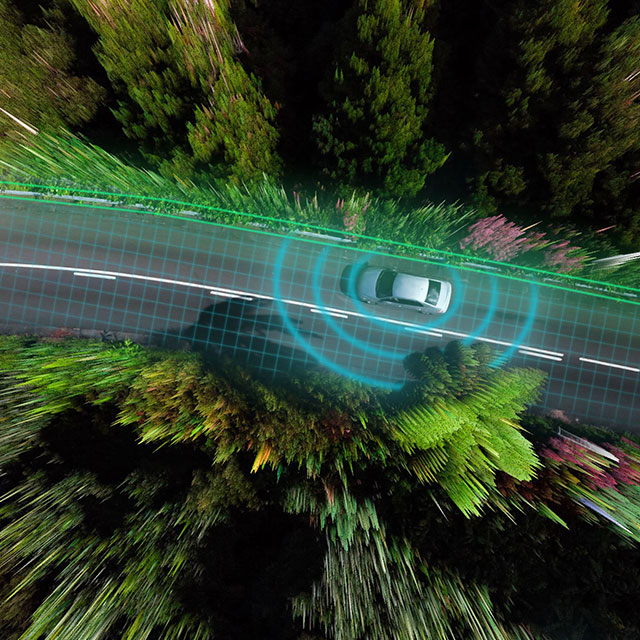

Imagine you’re responsible for programming an autonomous vehicle and are faced with a moral dilemma. You need to write the algorithm that will make the car’s decisions ahead of time, anticipating situations in which it must choose between options that each carry different moral implications.

For example, a runner trips and falls onto the road, and the only way for the autonomous car to avoid hitting them is to swerve into oncoming traffic. Should you tell the car to continue on its original path, harming and probably killing the runner, in order to protect its passengers? Or should it change course to spare the runner, putting its own passengers at risk, even though there’s no way to predict where that action will lead?

Which is the more ethical option? Or, more simply: what is the right thing to do?